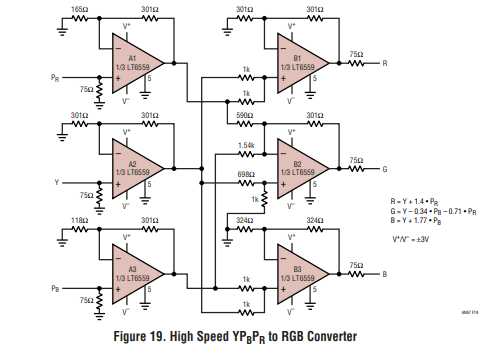

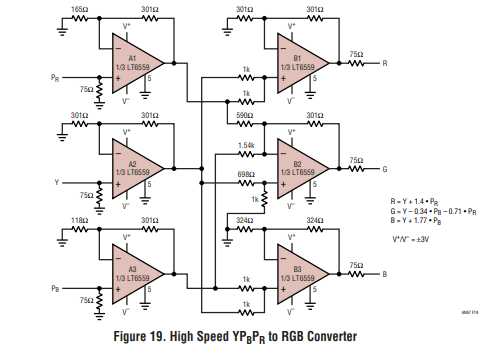

No, you’re right, it’s better to have RGB inside the FPGA, as it will be easier to work with. However, I don’t know if it’s better to acquire Component with the FPGA and then do the colorspace conversion to RGB digitally, or to do the math with op amps in the analog realm and then digitize the converted RGB. I’ve got a small module that I’ll release soon that does Component to RGB conversion in analog. I’ll publish the schematic online, but it’s greatly inspired by Linear Tech AN57.

It just requires some precise resistor values to do the math, nothing super elaborate, but digital conversion might be more precise and avoids the need for 6 high-speed op amps + passives.
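To give an idea of what "the math" looks like on the digital side, here is a minimal sketch of a YPbPr to RGB conversion. It assumes standard-definition BT.601 luma coefficients and normalized inputs (Y in 0..1, Pb/Pr in -0.5..0.5); the function names are mine, and a real FPGA implementation would use fixed-point arithmetic rather than floats.

```python
# BT.601 luma coefficients (assumption: SD video, not BT.709).
KR, KB = 0.299, 0.114
KG = 1.0 - KR - KB


def rgb_to_ypbpr(r, g, b):
    """Forward conversion, used here only to build test values."""
    y = KR * r + KG * g + KB * b
    pb = (b - y) / (2.0 * (1.0 - KB))
    pr = (r - y) / (2.0 * (1.0 - KR))
    return y, pb, pr


def ypbpr_to_rgb(y, pb, pr):
    """Convert normalized YPbPr to RGB, clamping the result to 0..1."""
    r = y + 2.0 * (1.0 - KR) * pr      # R = Y + 1.402 * Pr
    b = y + 2.0 * (1.0 - KB) * pb      # B = Y + 1.772 * Pb
    g = (y - KR * r - KB * b) / KG     # G recovered from the luma equation

    def clamp(v):
        return min(1.0, max(0.0, v))

    return clamp(r), clamp(g), clamp(b)


if __name__ == "__main__":
    # Round trip on an arbitrary color.
    print(ypbpr_to_rgb(*rgb_to_ypbpr(0.2, 0.6, 0.9)))
```

The analog version does the same weighted sums with resistor ratios, which is why the resistor tolerances matter there.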

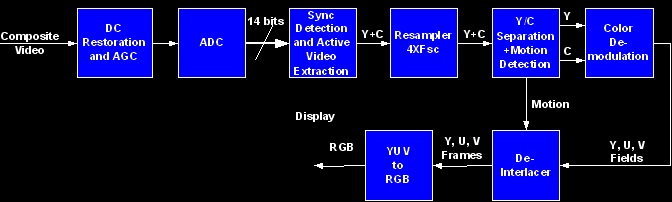

Component is useful in analog because syncs are embedded in Y, it can be used for luminance-based modulation, and it is easily converted to RGB as mentioned before. It probably makes less sense in a digital system though, as the system clock will probably be derived from the composite signal sync with a PLL and VCXO (as it is done in the Cadet Sync Generator), so we only need to sample the active part of the signal.

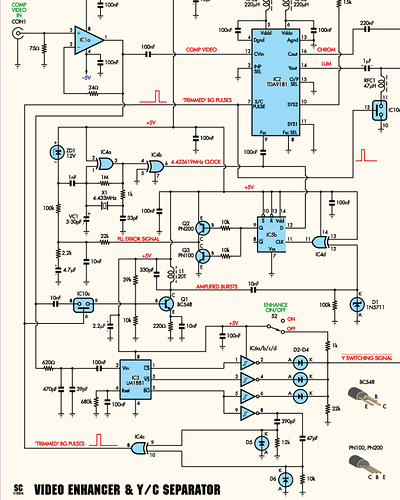

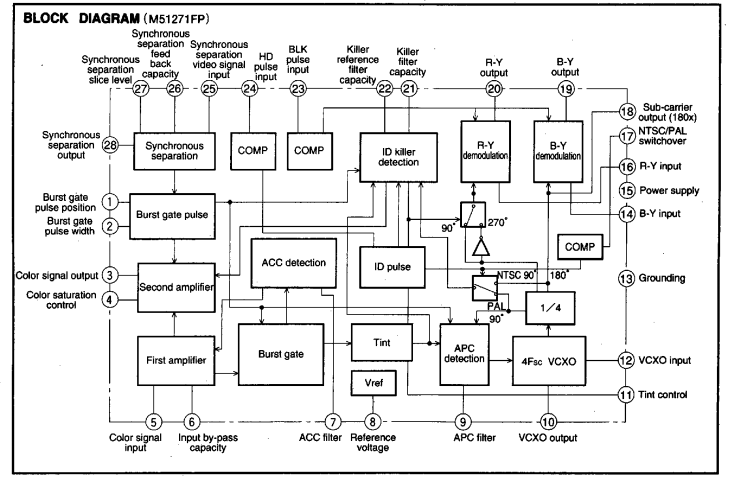

The only thing I’m not sure about on the analog path is the subcarrier genlock, as I wasn’t able to find a VCXO at subcarrier frequency, and it would probably require 2 of them (3.58MHz/4.43MHz). It can also be done with a crystal oscillator though, as it is done here.

The full composite video signal goes into the 10nF cap that is tied to the IC10c switch, controlled by the burst output of the LM1881, so the switch only passes signal during burst, which lets only the reference subcarrier through. Then Q1 and its associated components amplify the signal a lot to get a square signal. The amplified bursts are then phase compared to the signal generated by X1, and the output (PLL error signal) is used to compensate for this phase difference until both are in phase.

Not sure how it would be adapted to NTSC. It would probably require a different filter to amplify the bursts and a different crystal. I think that’s what the Kramer digital YC separator I’ve posted earlier does: the front NTSC/PAL switch turns switches on and off to get the proper frequency depending on what is required. Autodetection of the standard would be fancier.
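To illustrate the loop behaviour described above (compare burst phase with the local oscillator once per line, then pull the oscillator until the error disappears), here is a toy simulation. It is only a sketch under my own assumptions: a PAL line rate of 15625 Hz, a proportional + integral loop filter with arbitrary gains `kp` and `ki`, and an arbitrary 50 Hz crystal offset. It does not model the actual circuit or its component values.

```python
import math

PAL_LINE_RATE_HZ = 15625.0  # one burst (one phase measurement) per line


def wrap_phase(p):
    """Wrap a phase in radians to the range [-pi, pi)."""
    return (p + math.pi) % (2.0 * math.pi) - math.pi


def simulate_burst_lock(initial_error_rad, freq_offset_hz, lines,
                        kp=0.3, ki=0.05):
    """Return the phase error measured at each burst.

    Phases are expressed relative to the incoming burst, so the
    reference stays at 0 and the free-running crystal drifts by a
    fixed amount per line because of its frequency offset.
    """
    drift = 2.0 * math.pi * freq_offset_hz / PAL_LINE_RATE_HZ
    osc_phase = -initial_error_rad
    integ = 0.0
    errors = []
    for _ in range(lines):
        error = wrap_phase(0.0 - osc_phase)   # phase comparator output
        errors.append(error)
        integ += ki * error                   # integral term absorbs the drift
        pull = kp * error + integ             # frequency pull for the next line
        osc_phase += drift + pull
    return errors


if __name__ == "__main__":
    errs = simulate_burst_lock(2.0, 50.0, 300)
    print(f"first error: {errs[0]:.3f} rad, last error: {errs[-1]:.2e} rad")
```

With these gains the error decays over a few tens of lines. For NTSC the same structure would apply with a different line rate and a 3.58MHz crystal instead of 4.43MHz.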

About re-encoding back to analog afterwards, I was also thinking of doing DAC + RGB encoder, but figured out that it would be a little cheaper to get something like the ADV7391, as it takes care of both the digital to analog conversion and the analog format conversion. The only thing is that it can only output one format at a time (either composite, YC or component, set through I2C), while going the DAC + analog RGB encoder route would probably allow simultaneous composite/YC/component out without needing to be set up over I2C.

About non-standard/glitch signals: since the composite to component conversion (analog or digital) heavily relies on proper sync/color reference, I don’t really know how “flexible” it can be. From what I get, you need a proper signal at some point to derive all the clocks used by the analog and digital parts, so I guess it requires buffering the signal to keep a portion that is in-spec, so everything continues to run despite the sync or burst being corrupted. It seems that a framebuffer is what makes the difference between capture cards that handle glitches well and the ones that don’t. It also depends on what the designer considered to be an in-spec/out-of-spec signal, I suppose.

Anyway, I’ll see what other helpful schematics I can find about analog decoding. It looks rather complex but surely doable.

In the case of the ADV chip I’ve tested, I2C isn’t really optional it seems: the chip is turned off by default and needs to be turned on through I2C, same for the clock oscillator (I replaced it at first because I thought it wasn’t working, as nothing was showing on the scope), as well as some “ADI special writes”, which are registers that need to be edited but are not really explained in the datasheet. The idea I had behind the board with the decoder/encoder chip was a converter first (to go from Composite/YC/Component to Composite/YC/Component, plus PAL/NTSC conversion), so here it would need to be able to edit the parameters through I2C continuously (or at least whenever a setting is changed).
