here is a topic and category maybe? and also just curious whats ppls up to!

i’m working on this jetson nano based video mixer project. trying to reconfigure my approaches to the c++ plus shaders based video projects to allow for more modularity both on my back end design things and also for users. my goal is to have a basic easily scalable video mixer for this platform that can be reconfigured to mix from a variety of potential inputs (jetson nano allows for usb3 and usb2 camera/capture options, plus has 2 csi ports plus can take in pci cards for expansion, so potential input sources range from old school usb cameras/ez cap dongles to this crazy board expansion that does 4 simultaneous sd analog video inputs). trying to rethink the basic concept of video mixing as well to allow for like interesting cross modulation between video sources (maybe like grabbing hsb/rgb from one channel and remapping in a modular fashion to hsb/rgb on another channel? or maybe even like exploring other kinds of color spaces for remapping as well? thinking about the hearn videolab as a potential starting off point for nonlinear styled matrix mixing between sources. also this platform allows for gl 4.6 (i’ve been writing in like gl 1.2 and/or whichever esgl the raspi is up in pretty much ever since i learned how to gl) so that means i get to finally play with the floatBitsToInt functions and try to experiment with bitwise cross modding and chaotic bitflipping biz on the shaders which i used to have a lot of fun with in Processing zones). but yeah i want to use this zone as a testing ground for more modularity in how i’m running the shaders with c++ and test out some ideas i had wrt using shaders as sort of a vst for video so I can make it easy for both myself and for users to write their own custom shaders for mixing and/or effects and just drop them in the signal path without too much hassle. 
eventually want to bring this modularity and vst style approach back into the desktop video_waaaves set up because its getting too large and tangly and is becoming exponentially more difficult to troubleshoot and update stuffs over there, not to mention how off putting it is for folks who want to come in and mod it. I also just think it would be super rad if like literally anyone out there was working on a DIY style video mixer that didn’t cost 2000usd+ and wasn’t aimed directly at broadcast or video game streaming and also could literally fit in someones hand
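For anyone curious what the floatBitsToInt style bit mangling looks like, here is a rough CPU-side sketch in C++. `floatBitsToUint` and `uintBitsToFloat` mirror the GLSL built-ins of the same names; `xorMantissa` is a made-up helper for illustration only, not something from the mixer project. It XORs the low mantissa bits of one channel into another and leaves sign and exponent alone.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// CPU-side stand-ins for GLSL's floatBitsToUint / uintBitsToFloat:
// reinterpret the 32 bits of a float without changing them.
inline std::uint32_t floatBitsToUint(float f) {
    static_assert(sizeof(float) == sizeof(std::uint32_t), "expects 32-bit float");
    std::uint32_t u;
    std::memcpy(&u, &f, sizeof u);
    return u;
}

inline float uintBitsToFloat(std::uint32_t u) {
    float f;
    std::memcpy(&f, &u, sizeof f);
    return f;
}

// Hypothetical cross-mod: XOR the lowest `bits` mantissa bits of channel b
// into channel a. Sign and exponent bits of a are never touched.
inline float xorMantissa(float a, float b, int bits) {
    assert(bits >= 0 && bits <= 23);  // a 32-bit float has 23 mantissa bits
    const std::uint32_t mask = (bits == 0) ? 0u : ((1u << bits) - 1u);
    return uintBitsToFloat(floatBitsToUint(a) ^ (floatBitsToUint(b) & mask));
}
```

With a small `bits` value this only adds fine grain noise driven by the other channel; pushing it toward 23 gets much more chaotic. Applying the same XOR twice gives back the original value, which can be handy for testing.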

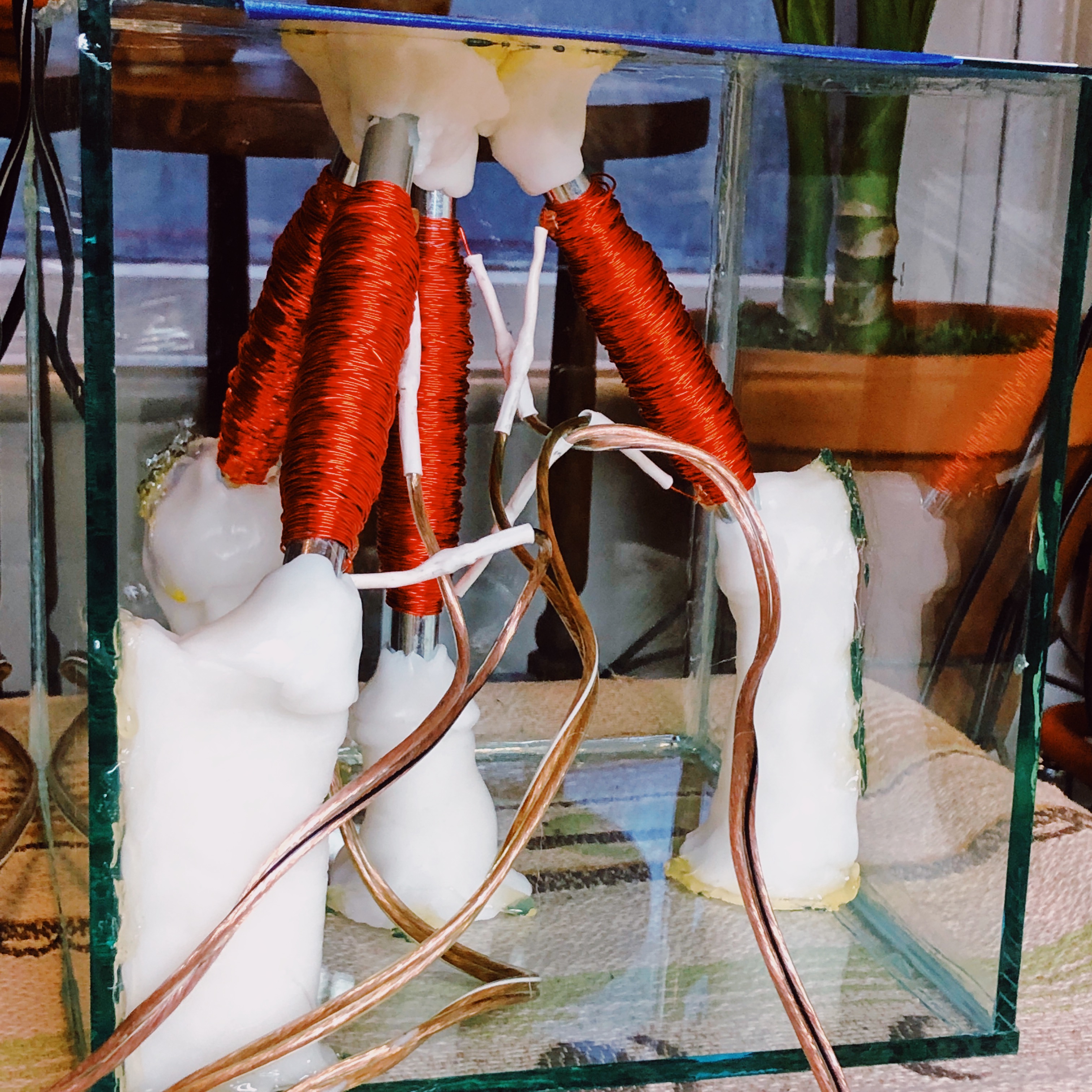

I’ve been working on programming patches to control home made electromagnetic coils, to create patterns in ferrofluid.

@andrei_jay - it is interesting , not sure if you have seen how shaders are implemented in recur, but here i basically went for full user modularity at the expense of usability / simplicity, or at least a steeper learning curve…

this is always the hard part about designing technical things - i like it in wavepools for example how users dont have to be thinking about shaders , but more about the underlying actions they want to see - recur doesnt allow this abstraction…

@palomakop super cool ! how are you programming patches ? i guess you send them amplified audio signals ? oof seeing those hand-wound coils giving me flashbacks to a long and painful day winding 12 coils for a tv-wall installation years ago haha

i am in the habit of working on too many things at once. it is nice though when you get stuck or bored or critical-parts-are-held-in-customs-after-waiting-months-from-china-and-then-returned-to-sender to shelf one thing and pick up something else.

lately i have been playing with this idea: what could an accessible/beginner-friendly diy digital instrument for performing vectors be like? in similar vein to how recur is for performing video…

its tricky though because not everyone has old oscilloscopes lying around in the same way old tvs tend to. making some progress on this front tho

@cyberboy666 whoa, the scope emulation thing looks great.

people seem to really like the oscilloscope graphic artist which is just very simple oscillators, so it seems like small vector generation environments would be welcomed.

for my coils, yes i’m sending them voltage from audio amps, though i’m sending them mostly around 0-2 hertz. generating the signals in max msp.

oh yeah i’ve got one of those vector synth things cooking up for the rpi too! trying to come at it from the perspective of being both a standalone thing that folks could pipe “vectors” out into either a scope or out via hdmi/composite outputs as well. been trying to make it self contained tho with its own ‘audio’ engine thats designed just around having a lot of video control vs needing to care about anything sounding like ‘musical’ or whatever so folks don’t need to also dig into like pure data or have a fuck ton of modular gear around for the audio side of things too. audio side of things is taking a bit of time i’m working with this basic synthesis engine i whipped up in oF a while ago based mainly on the idea that everything in the audio part of things is just oscillators that can go from sub to supra audio ranges and a purely functional approach to synthesis so just fm/pm and rm/am. so like all the pattern generation is pretty much just like osc(theta*osc+osc)*osc kind of stuff. also want to have the option for like the same thing to be going out thru like a hifi berry at the 192k for like the super crisp lines.
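A minimal C++ sketch of the osc(theta*osc+osc)*osc idea, assuming plain sine oscillators. The names `osc`, `pmRm` and `vectorPoint` and all the parameters are made up for illustration and are not taken from the oF engine described above.

```cpp
#include <cassert>
#include <cmath>

// Sine oscillator: freqHz in Hz, t in seconds, phase offset in radians.
inline double osc(double freqHz, double t, double phase = 0.0) {
    const double twoPi = 6.283185307179586;
    return std::sin(twoPi * freqHz * t + phase);
}

// Carrier that is phase-modulated (pm) by one oscillator and then
// ring-modulated (rm) by another: osc(theta + depth*osc) * osc.
inline double pmRm(double t, double carrierHz, double carrierPhase,
                   double pmHz, double pmDepth, double rmHz) {
    assert(pmDepth >= 0.0);
    const double modulatedPhase = carrierPhase + pmDepth * osc(pmHz, t);
    return osc(carrierHz, t, modulatedPhase) * osc(rmHz, t);
}

struct Point { double x, y; };

// X/Y pair for a scope: same recipe on both axes, with the y carrier
// shifted by a quarter cycle relative to x.
inline Point vectorPoint(double t, double carrierHz,
                         double pmHz, double pmDepth, double rmHz) {
    const double quarterCycle = 1.5707963267948966;
    return { pmRm(t, carrierHz, 0.0, pmHz, pmDepth, rmHz),
             pmRm(t, carrierHz, quarterCycle, pmHz, pmDepth, rmHz) };
}
```

Every output stays within -1..1 because it is a product of sines, so it can be scaled straight to a DAC or to screen coordinates. Nothing here cares whether a frequency is sub-audio or well above it.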

and yeah the recur approach is partly what i’m going for, im pretty interested in trying to figure out more of this functionality in gl4 tho

i had issues with performance in most of my previous attempts to implement multiple shader runs in my stuffs, plus like all the branching involved in trying to set up a 1 pass shader that bundled a bunch of different effects, but it kind of looks like subroutines could clean up a lot of the issues i had with both of those things…

ive been working on something similar which is taking the shape of a sort of visual coding env. so far its just been a way to consolidate disparate bits of c++ written for different projects / artworks over the years, and also de-couple this from an always-unfinished gui project (that’s been rewritten twice!). the result is close to a hybrid vj program and maxmsp (though much simpler).

im focussing on io and hardware right now, but curious about how gl shaders can be abstracted or cracked open into a node structure, as i’ve not played with these (vvvv, hydra, touch designer). theres an amazing project Manolo Naon showed us at the last video circuits berlin - i met him at a bar and when we got talking about video circuits he whipped out his laptop and started hooking together all these oscillators and colorisers! sadly though i don’t think this will see the light of day

the simplicity of the isf spec i find nice due to limited gl experience, so would probably approach gl from the perspective of how isf works and could be improved - for example, a slightly abstracted gl language that compiles to different versions / flavours. for more complicated recursion, the problem as i understand it is trying to work out how to inject something into the shader flow with multiple passes - with hydra handling this by compiling a single shader to the gpu.

at the moment im mostly busying myself working out how to most efficiently run updates and threads. at its core its roughly three layers: a base lib of components that are methodless (ie. only ever mutating bools / floats / ints rather than calling funcs), a layer which standardises I/O nodes (passing vects of floats, textures, sound buffers) and a gui layer for connecting it all together.

for sound processing ive been using ofxsoundobjects and would recommend contributing to that (theres an ofxaudioanalyzer node in my fork which is being used for a music video right now)

bit pulled-in-all-directions during this pandemic but hopefully will have more time to work on it all soon!

@andrei_jay have you seen this? ive not looked into it yet but seems promising: Audio Injector

haha yes i have, its on my shopping list to mess with once i finish up a couple other projects and have some better default projects set up for audio on the pi! was originally looking for something with just 4 outputs so i could play around with XYZ display controllers from the pi but if i’m gonna mess around with multi channel out i might as well make the whole thing modular and scalable as well, theres a lot of potential for that. also cautiously interested in that for the simultaneous audio visual synth stuffs i play with and abandon about 1nce per year

Been researching a lot about composite video sync generators these days.

Started by looking how it was done prior to all-digital solutions, like on the first Atari Arcade games where everything was done with CMOS, mostly progressive sync which is rather simple to implement with basic logic.

Found a few schematics of interlaced sync generators done with logic only, but it was rather complex to adapt them to generate both composite video sync standards.

So this made me pull the trigger and dig more into FPGAs, as those are perfect for this task. I wrote some VHDL for a simple PAL/NTSC composite sync generator based on a Xilinx CoolRunner-II (XC2C64), still lacking proper resets for genlocking and such, but will be happy to share it when it is a bit more elaborated.
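To give an idea of the counter arithmetic behind a sync generator, here is a small C++ model of the horizontal part only. It is not the VHDL mentioned above. The 13.5 MHz clock used in the note below is just an assumption for the example, and `LineTiming`, `makeTiming`, `palTiming`, `ntscTiming` and `hsyncActive` are made-up names. Vertical sync, equalizing pulses and interlace are deliberately left out.

```cpp
#include <cassert>
#include <cmath>

struct LineTiming {
    int clocksPerLine;  // the line counter wraps at this value
    int hsyncClocks;    // sync is held low for this many clocks
    int linesPerFrame;  // 625 for PAL, 525 for NTSC
};

// Derive counter limits from a clock frequency and a line rate.
// The horizontal sync pulse is taken as roughly 4.7 microseconds.
inline LineTiming makeTiming(double clockHz, double lineRateHz, int linesPerFrame) {
    assert(clockHz > 0.0 && lineRateHz > 0.0);
    const double hsyncSeconds = 4.7e-6;
    return { static_cast<int>(std::lround(clockHz / lineRateHz)),
             static_cast<int>(std::lround(clockHz * hsyncSeconds)),
             linesPerFrame };
}

// PAL: 15625 Hz line rate (64 microsecond lines), 625 lines per frame.
inline LineTiming palTiming(double clockHz) {
    return makeTiming(clockHz, 15625.0, 625);
}

// NTSC: line rate of 4.5 MHz / 286 (about 15734.27 Hz), 525 lines per frame.
inline LineTiming ntscTiming(double clockHz) {
    return makeTiming(clockHz, 4.5e6 / 286.0, 525);
}

// True while sync should be pulled low, counting clocks from the
// leading edge of the sync pulse.
inline bool hsyncActive(const LineTiming& t, int clockInLine) {
    return clockInLine >= 0 && clockInLine < t.hsyncClocks;
}
```

With a 13.5 MHz clock this gives 864 clocks per PAL line and 858 per NTSC line, so switching standards is mostly a matter of swapping the counter limits.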

I really like the simulation environment of FPGAs, being able to see what’s happening at each clock cycle is super handy for testing code, I was trying to do a similar thing with an arduino but had to compile and hook it up to a scope at each modification so I could check what was happening on a precise line of the video signal, tried also through the serial monitor but it’s hard to check precise stuff imo.

Anyway, I might start a dedicated thread about video sync generation as it is a broad subject.

Otherwise I’ve been reading up a lot on Y/C separation (basically composite to s-video), mainly because having luminance and chrominance separated from a composite signal makes it easier to process in analog (and also in digital of course, as it is required to convert the source signal to an RGB/YUV colorspace). I’ve tested a few simple analog filters, which didn’t work super well, the main issue being that there is still some chrominance left in the luminance, so some monitors pick up colors even if the level is low, which doesn’t allow for a true b&w image. There were specialized digital comb filter ICs made when analog video was still prevalent in the mass market, which were analog in/analog out, but most are obsolete, so when going for a digital solution it’s better to look at what is still made.
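For reference, the basic idea of a one-line-delay comb filter can be sketched in a few lines of C++. This assumes an already digitized NTSC signal, where the chroma subcarrier phase flips by 180 degrees from one line to the next, and it assumes both lines carry the same picture content. `YC` and `combSeparate` are made-up names, and this is much simpler than what the dedicated ICs or modern decoder chips do.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct YC {
    std::vector<float> luma;
    std::vector<float> chroma;
};

// One-line (1H) comb filter on sampled composite video.
// If the previous line is Y - C and the current line is Y + C,
// the sum cancels chroma and the difference cancels luma.
inline YC combSeparate(const std::vector<float>& prevLine,
                       const std::vector<float>& curLine) {
    assert(prevLine.size() == curLine.size());
    YC out;
    out.luma.resize(curLine.size());
    out.chroma.resize(curLine.size());
    for (std::size_t i = 0; i < curLine.size(); ++i) {
        out.luma[i]   = 0.5f * (curLine[i] + prevLine[i]);
        out.chroma[i] = 0.5f * (curLine[i] - prevLine[i]);
    }
    return out;
}
```

Where the picture changes between the two lines, the cancellation is no longer exact and some chroma leaks into the luma (and the other way round), which is part of why real comb filters are more elaborate than this.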

From what I’ve found, the comb filters are now fully integrated in video decoder chips like the ADV ones from Analog Devices, which also take care of converting the signal to digital. That means an analog Y/C output would require a video encoder too (and a micro or something to set the parameters of both ICs over i2c).

@andrei_jay super interested in your video mixer project! Reading video decoder datasheets made me think about how feasible a DIY video mixer would be recently too, but yeah the whole syncing/processing signals in the digital realm is way over my head currently

Just checked the jetson nano, looks like it can sync the two video inputs, that’s really nice.

@palomakop I’ve watched your latest work involving ferrofluids, it’s brilliant, the control you have over it makes it look like it’s a living thing, super cool to see some “behind the scenes” pictures.

@cyberboy666 awesome work!! As we previously discussed, including some kind of stroke to raster scan conversion could be nice for those who don’t have an analog scope.

@autr eager to see more, I really like visual coding environments, I’m not super “code literate” so this helps a lot! That’s also what I liked with FPGAs, as it is possible to program them using only logic building blocks. I started learning really basic vhdl in the end as I didn’t manage to make what I wanted with the schematic part, but this sure helps to understand a bit more how it works.

I hope all of these posts get their own thread one day - so much good stuff

bumping this thread! also started working on something new this weekend.

here is a silly little demo i just recorded with it to promote the chat lol

@cyberboy666 thank you for this absolute gift.

side note: when i saw the thumbnail, i was like “chat scan … is that like a French cat scan?”

I’m in the (extremely) early stages of putting together some eurorack modules, including a combined sync-generator and ramps module. LZX recently announced a lot of new modules and it looks like they are going to put out a very similar module so I was a bit discouraged but I think I’ll still push ahead with it and see if the market can bear my take on the idea.

text manipulator??

At the moment i work with contour finder and tracking algorithms. The original video material is from CalArts. First in the original video i search manually for sequences that follow the movement of the dancers over several seconds. These sequences are then analyzed by OpenCV and openFrameworks almost in real time and mixed with the original video.

These images are based on a video from CalArts

Dance 2019

Original video: cc by nc 3.0 https://vimeo.com/322918472

Using the optical flow from a video to animate a mesh.

Yes hehe it is/was a text generator and scrambler.

Was coming along nicely but I got a bit discouraged when I couldn’t figure out how to sync lock it to an external video signal (think text overlay style )

- still it will be fun / useful without that but I wanted it all haha. Will come back to this project and have some more info about it some time this year I hope